How I Use Free Tesseract OCR to Convert PDF into Editable Text for Incremental Reading

1. Split PDF into images

2. Use Xnview to crop out PDF headers and footers

3. Use Tesseract OCR to convert images to txt

4. Combine individual txt files into one big txt file

5. Remove PDF line breaks

6. Import into SuperMemo

I wrote a similar guide called Digitizing Learning Materials for Anki/SuperMemo 2 years ago. The OCR tools mentioned there are commercial products. In this article I’ll share how I use the free Tesseract OCR to achieve similar results without spending a penny. I’ll use How Learning Happens as the example (it’s a great read, by the way). I have access to Taylor & Francis Group and downloaded the true PDF (with highlightable, selectable text) there.

Tools needed:

1. Git for Windows (Optional; I’ve included commands for Windows' cmd)

2. Xnview

3. Tesseract OCR

The Git for Windows and Xnview download and installation guides are self-explanatory. For Tesseract OCR, go to Tesseract at UB Mannheim and download tesseract-ocr-w64-setup-v5.0.0-alpha.20200328.exe (64-bit).

Why Tesseract? Tesseract is under active development, and it’s probably the most widely used free OCR engine. Also, “Tesseract 4 adds a new neural net (LSTM) based OCR engine”, which sounds like it should give high-quality results.

Why the alpha version? Because, as stated:

[W]e think that the latest version 5.0.0-alpha is better for most Windows users in many aspects (functionality, speed, stability).

1. Split PDF into images

Update: Special thanks to u/alessivs for providing the first solution.

Xpdf command line tools

Head over to XpdfReader to download the Xpdf command line tools (Windows 32/64-bit).

- Unzip and put the folder xpdf-tools-win-4.02 to C:\Program Files (x86)

- Set System Environment Variable: add C:\Program Files (x86)\xpdf-tools-win-4.02\bin64 (or C:\Program Files (x86)\xpdf-tools-win-4.02\bin32 if you’re on Windows 32-bit) to path

- In the folder where your PDF is located, press Alt + D, type cmd and press Enter to open the command prompt window.

- Paste and execute the following command (the -r argument is for DPI):

pdftopng -r 300 HowLearningHappens.pdf HowLearningHappens

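PS: If you have Windows Subsystem for Linux, you may use the following command to split the PDF into images instead (pdftoppm defaults to 150 DPI, so -r 300 keeps the resolution the same as above):

pdftoppm -png -r 300 HowLearningHappens.pdf HowLearningHappens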

2. Use Xnview to crop out PDF headers and footers

After splitting I have 329 images:

Before cropping out PDF headers and footers, I’d remove all unnecessary pages like HowLearningHappens-001.png (cover), HowLearningHappens-005.png (logo) or HowLearningHappens-021.png (logo).
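
If you’d rather not delete pages outright, here is a minimal Git Bash sketch that moves them into a subfolder instead. The function name set_aside_pages and the skipped folder are my own placeholders, and it assumes the 3-digit file naming shown above (HowLearningHappens-001.png); adjust the %03d if your files are numbered differently.

```shell
# Move the given page images into a "skipped" subfolder instead of deleting them.
# Hypothetical helper; assumes names like PREFIX-001.png (3-digit page numbers).
set_aside_pages() {
  prefix=$1
  shift
  mkdir -p skipped
  for page in "$@"; do
    # Pass page numbers without leading zeros (1, 5, 21), printf pads them.
    file=$(printf '%s-%03d.png' "$prefix" "$page")
    if [ -e "$file" ]; then
      mv "$file" skipped/
    else
      echo "not found: $file" >&2
    fi
  done
}

# Example: set_aside_pages HowLearningHappens 1 5 21
```

Anything moved this way stays out of the later OCR loop, which only looks at the PNG files in the current folder, and can be moved back if a page turns out to matter.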

If you open any image, you can see that the header and footer don’t need to be OCR-ed. If you don’t crop them out, they will get OCR-ed anyway and end up interspersed in the text, which is time-consuming to remove afterwards.

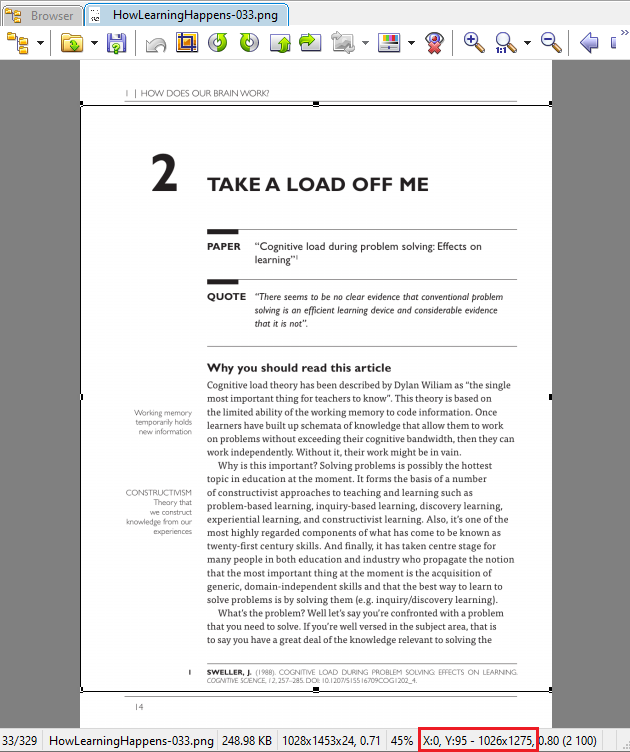

I’d open one image in Xnview to get a reference dimension (template) like the following:

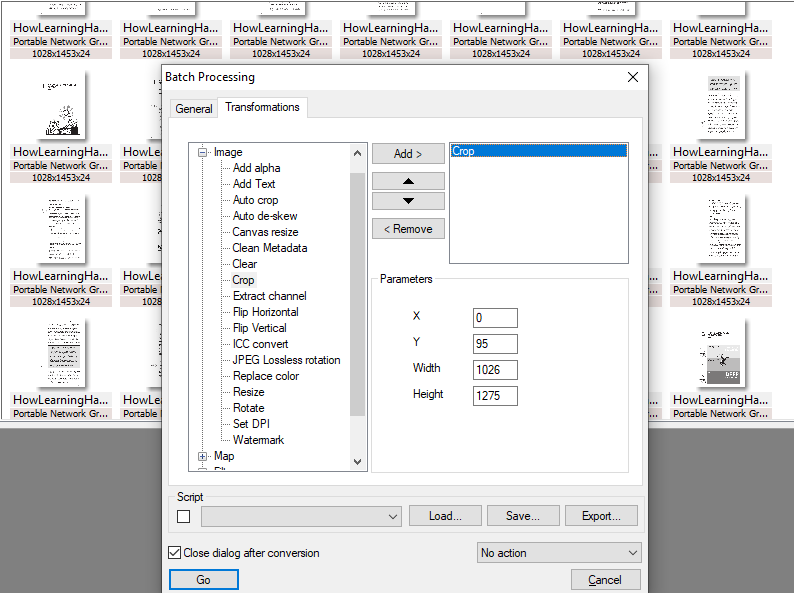

After getting the reference dimension, I can use Xnview’s batch processing to do the same for every image:

At this point all the images are ready to be fed to Tesseract OCR.
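
For anyone who prefers to script the crop rather than use Xnview’s batch dialog, the reference dimension boils down to a small piece of arithmetic: keep the full width, drop the header pixels from the top and the footer pixels from the bottom. The sketch below only computes that crop box. The name crop_box and the pixel values in the example are hypothetical, not measurements from my book.

```shell
# Print a WIDTHxHEIGHT+X+Y crop box that drops `top` pixels of header and
# `bottom` pixels of footer from a page of the given pixel size.
# Hypothetical helper; measure the real values on one page first.
crop_box() {
  width=$1 height=$2 top=$3 bottom=$4
  kept=$((height - top - bottom))
  if [ "$kept" -le 0 ]; then
    echo "header and footer leave nothing to keep" >&2
    return 1
  fi
  printf '%sx%s+0+%s\n' "$width" "$kept" "$top"
}

# Example with made-up numbers: a 2550x3300 page, 200 px header, 150 px footer
# crop_box 2550 3300 200 150
```

The WIDTHxHEIGHT+X+Y form is the geometry syntax that ImageMagick accepts, so, assuming ImageMagick is installed (it is not part of my setup above), the result could be passed to magick mogrify -crop "$(crop_box 2550 3300 200 150)" +repage *.png. mogrify overwrites files in place, so try it on a copy of the images.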

3. Use Tesseract OCR to convert images to txt

PS: Tesseract OCR is a command-line program.

In the folder where your images are located, press Alt + D, type cmd and press Enter to open the command prompt window. Then execute this command:

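for /r %i in (*.png) do tesseract "%i" "%i" -c preserve_interword_spaces=1

PS: For git bash:

for i in *.png; do tesseract "$i" "$i" -c preserve_interword_spaces=1; done

Basically, this command loops over all the images and feeds each one to Tesseract. The quotes keep file names with spaces intact. Because the image name is also used as the output name, each result is saved next to its image with .txt appended (HowLearningHappens-002.png becomes HowLearningHappens-002.png.txt). I’ve added the argument preserve_interword_spaces=1. I’m not sure if it makes any difference. For further arguments please see the TESSERACT(1) Manual Page.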

This processing takes quite some time, depending on how many images you feed into Tesseract of course. For the book How Learning Happens (329 images) I think it took around half an hour.
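
Since a run this long can get interrupted, here is a hedged Git Bash sketch that only OCRs pages that don’t have a non-empty text file yet, so you can simply rerun it. The function names pending_pages and ocr_pending are my own. The tesseract call is the same one as above.

```shell
# List page images in directory $1 that have no non-empty OCR output yet.
# Matches the naming used above, where page.png produces page.png.txt.
pending_pages() {
  for img in "$1"/*.png; do
    [ -e "$img" ] || continue            # the glob matched nothing
    [ -s "$img.txt" ] || printf '%s\n' "$img"
  done
}

# OCR only the pending pages, so an interrupted run can be resumed.
ocr_pending() {
  pending_pages "$1" | while IFS= read -r img; do
    tesseract "$img" "$img" -c preserve_interword_spaces=1 < /dev/null
  done
}

# Example: ocr_pending .
```

Checking for a non-empty file rather than just an existing one means a page whose output was cut off at zero bytes gets redone. A page that was only partly written would still be skipped.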

4. Combine individual txt files into one big txt file

To combine all the individual Tesseract output into one text file, run the following command in cmd:

copy /b HowLearningHappens-*.txt HowLearningHappens_All.txt

PS: For git bash:

cat HowLearningHappens-*.txt > HowLearningHappens_All.txt

However, before actually doing so, I recommend checking the order in which the files will be concatenated. In cmd, run for /r %i in (*.txt) do echo %i; in git bash, run echo HowLearningHappens-*.txt. The files may be listed in numerical or lexicographic order, and you want numerical order. With lexicographic order the pages get shuffled (e.g., 1, 2, 10, 15, 20 becomes 1, 10, 15, 2, 20), so the content of the output file won’t make sense.

After making sure the order is correct, combine all the txt files into one file with the above command.
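
If the order does turn out to be lexicographic, one way around it in Git Bash is to sort the file names numerically before concatenating. This is a sketch, not what I originally ran: pages_in_order and combine_pages are hypothetical names, and it relies on the -V (version sort) option of GNU sort, which I assume your Git Bash or Linux install provides.

```shell
# Print PREFIX-*.txt files in natural (numeric-aware) order, one per line.
# Relies on GNU sort -V (version sort).
pages_in_order() {
  for f in "$1"-*.txt; do
    [ -e "$f" ] && printf '%s\n' "$f"
  done | sort -V
}

# Concatenate the pages in that order into PREFIX_All.txt.
# Uses > rather than >>, so rerunning replaces the file instead of appending.
combine_pages() {
  pages_in_order "$1" | while IFS= read -r f; do
    cat "$f"
  done > "${1}_All.txt"
}

# Example: combine_pages HowLearningHappens
```

The output name uses an underscore, so HowLearningHappens_All.txt never matches the HowLearningHappens-*.txt pattern and is not fed back into itself.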

5. Remove PDF line breaks

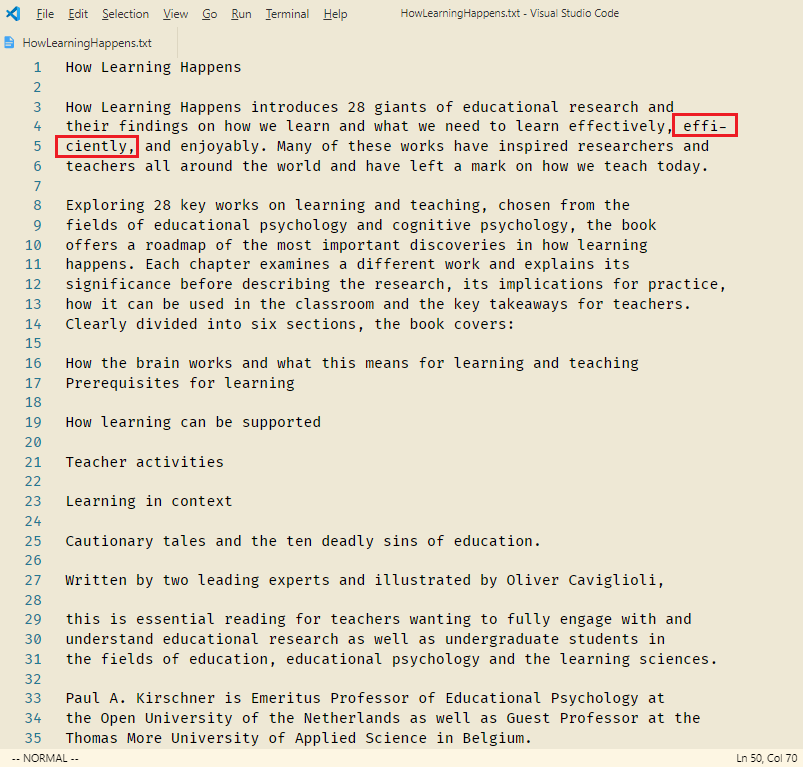

This is the result I get:

As you can see, the result is filled with incorrect line breaks. There is also a word-split problem (e.g., efficiently is split into effi-ciently). These aren’t the fault of Tesseract, but rather of how the original PDF is laid out. I use Line Break Removal Tool to remove line breaks only (not paragraph breaks). For the word-split problem, a regex find-and-replace can remove the leftover hyphens.
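
For those who want to do both cleanups from the command line, here is a minimal awk sketch run from Git Bash. It is neither the Line Break Removal Tool nor my original regex, just one way to get a similar result. The name unwrap_text is hypothetical. It treats a blank line as a paragraph break, joins the other lines with a space, and glues a line ending in letter-plus-hyphen directly onto the next line.

```shell
# Read OCR text on stdin, write unwrapped text on stdout.
# Caveat: a genuine compound broken at a line end (well-\nknown) loses its hyphen.
unwrap_text() {
  awk '
    function emit() { if (buf != "") print buf; buf = "" }
    {
      line = $0
      gsub(/[\r\f]/, "", line)      # drop Windows CRs and page-break form feeds
      sub(/^[ \t]+/, "", line)
      sub(/[ \t]+$/, "", line)
      if (line == "") { emit(); print ""; next }   # blank line: paragraph break
      if (buf == "")                 buf = line
      else if (buf ~ /[A-Za-z]-$/)   buf = substr(buf, 1, length(buf) - 1) line
      else                           buf = buf " " line
    }
    END { emit() }
  '
}

# Example: unwrap_text < HowLearningHappens_All.txt > HowLearningHappens_clean.txt
```

Because it only looks at hyphens at the very end of a line, it has to run on the raw OCR output, before any other tool has already joined the lines.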

What about images?

If you want, you can open the PDF, screenshot the figures, and paste them into the corresponding places in the text. If you do this, you don’t have to open the PDF for those figures and charts during Incremental Reading. Personally, I don’t do this. I just open the PDF during the actual reading.

How is the OCR quality compared to ABBYY FineReader?

PS: I have only used Tesseract with true PDF so I can’t speak for scanned PDF. Also, I didn’t use an identical PDF to test both programs. This is purely anecdotal.

I find the quality difference negligible. Tesseract is only slightly worse than ABBYY FineReader. For example, “as a” might become “asa”, or “I” might become “l”, which never happened in ABBYY FineReader. Other than these minor errors, there isn’t really any significant difference (or maybe I just can’t tell). Certainly Tesseract is good enough that it isn’t worth forking out $200 for ABBYY FineReader.

If you’re doing this for your calculus textbook, however, this is not going to work: OCR won’t handle symbols and equations. You may still use Tesseract for the paragraphs and explanatory text, then handle the Maths-heavy parts manually with reference to How To Use LaTeX In SuperMemo.

Conclusion

This may look like a lot of installation and steps, but once you’re set up and get used to your particular workflow, it’ll become second nature. I hope this has been helpful: OCR has made dealing with PDF so much easier.